Interplay of the EU AI Act and GDPR

Published on 25th March 2025

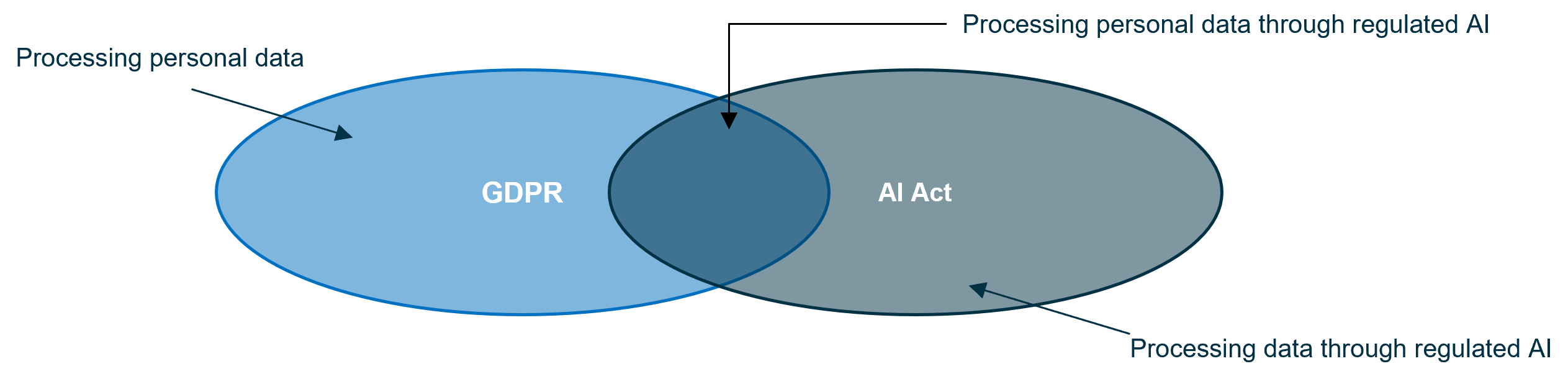

In certain scenarios, the EU Artificial Intelligence Act (“AI Act”) and the EU General Data Protection Regulation (“GDPR”) overlap when personal data is processed through regulated AI systems. To illustrate these overlaps, the table below gives an overview of the key requirements under the AI Act that apply to Providers* and Deployers*¹ in relation to high-risk AI systems* and links each requirement to a corresponding requirement under the GDPR.

This overview is intended to help organizations identify synergies with existing processes and protocols that could be leveraged for AI compliance measures, align internal workstreams, and enable the internal privacy organization to identify relevant touchpoints with the AI compliance organization.

This document does not address the interplay of the AI Act with other areas of law, such as product regulation, IP, cyber security, digital regulation, commercial, or labor & employment law.

¹ Non-public bodies and institutions only

Areas of overlap between GDPR and AI Act

Key Definitions

- Provider: means an organization that develops an AI system (or has an AI system developed) and places it on the EU market or puts the AI system into service under its own name or trademark, whether for payment or free of charge

- Deployer: means an organization using an AI system under its authority, except where the AI system is used in the course of a personal non-professional activity

- High-risk AI systems: may include AI systems intended to be used (i) for emotion recognition, (ii) for recruitment or selection of individuals, in particular to place targeted job advertisements, to analyze and filter job applications, and to evaluate candidates, or (iii) to make decisions affecting employees (e.g., terms or termination of employment, promotions, task allocation, performance and behavior evaluation)

Interplay of the AI Act and GDPR

| AI Act requirements relating to high-risk AI systems | Interplay with GDPR requirements | Responsible actor under the AI Act: Provider | Responsible actor under the AI Act: Deployer |
| --- | --- | --- | --- |
| Extraterritorial application (Art. 2 AI Act) | | | |
| AI literacy (Art. 4 AI Act) | | | |
| Risk management system (Art. 9 AI Act) | | | |
| Data and Data Governance (Art. 10, 26 (3) AI Act) | | | |
| Transparency towards deployer (Art. 13 AI Act) | | | |
| Transparency towards affected individuals (Art. 50, 26 (11), 86 AI Act) | | | |
| Human Oversight (Art. 14, 26 AI Act) | | | |
| Accuracy, robustness and cybersecurity (Art. 15 AI Act) | | | |
| Quality management system (Art. 17 AI Act) | | | |
| Recording of log-files (Art. 12 AI Act) | | | |
| Documentation Keeping (Art. 18, 26 AI Act) | | | |
| Corrective actions and duty of information (Art. 20, 26 (5) AI Act) | | | |
| Post-market monitoring (Art. 72, 26 (5) AI Act) | | | |
| Reporting of serious incidents (Art. 73, 26 (5) AI Act) | | | |
| Appointment of representative in the EU (Art. 22 (1) AI Act) | | | |
| Fundamental Rights Impact Assessment (Art. 27 AI Act) | | | |
| Technical documentation (Art. 11 AI Act) | | | |
| TOM to use AI system in accordance with instructions (Art. 26 (1) AI Act) | | | |
| Registration (Art. 49 AI Act) | | | |
| Conformity assessments and EU declaration of conformity (Art. 43, 47 AI Act) | | | |
| Cooperation with regulator (Art. 21, 26 (12) AI Act) | | | |

Download the complete document as pdf here.